Path2Response Product Management Knowledge Base

Welcome to the comprehensive knowledge base for product management at Path2Response.

About This Book

This knowledge base serves as the central resource for:

- New PM onboarding - Everything a new Product Manager needs to understand Path2Response

- CTO’s interim PM responsibilities - Reference for current product management work

- Claude Code as PM assistant - Context for AI-assisted product development

- Institutional knowledge preservation - Documentation that persists beyond individuals

About Path2Response

Path2Response is a data cooperative specializing in direct mail marketing with growing digital audience capabilities. Founded ~2015 and headquartered in Broomfield, CO.

2025 Theme: “Performance, Performance, Performance”

Key Strategic Shift: Moving toward digital-first products — onboarding proprietary audiences into ad tech ecosystems like LiveRamp and The Trade Desk.

Core Products

| Product | Description |

|---|---|

| Path2Acquisition | Custom audience creation (primary product) |

| Path2Ignite | Performance-driven direct mail |

| Digital Audiences | 300+ pre-built segments |

| Path2Contact | Reverse email append |

| Path2Optimize | Data enrichment |

| Path2Linkage | Data partnerships |

Leadership

- Brian Rainey - CEO

- Karin Eisenmenger - COO (Product Owner for PROD)

- Michael Pelikan - CTO (Engineering leadership)

- Phil Hoey - CFO

How to Use This Book

Getting Started

If you’re new to Path2Response, start with:

- Solution Overview - Core concepts and company identity

- Path2Acquisition Flow - How our primary product works

- Glossary - 100+ term definitions

For Product Managers

Key processes to understand:

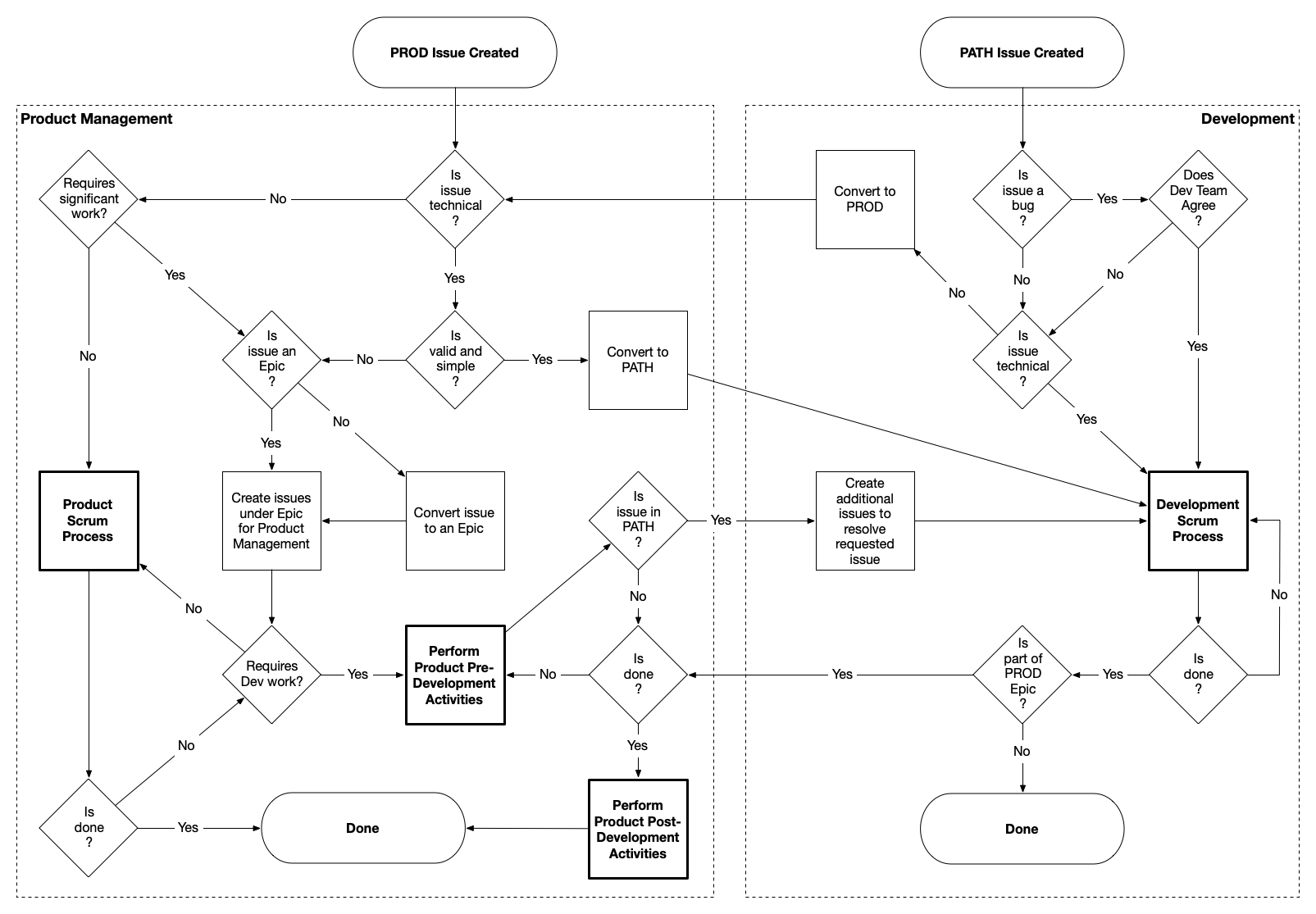

- PROD-PATH Process - How Product and Development work together

- Stage-Gate Process - Initiative lifecycle (6 stages, 5 gates)

- Work Classification - Initiative vs Non-Initiative vs Operational

For Technical Understanding

System documentation in priority order:

- BERT Platform - Our unified backend (migration target)

- Project Inventory - Complete inventory of 177 repositories

- coop-scala - Core Spark data processing

For Industry Context

Understanding direct mail marketing:

- Direct Mail Fundamentals - Value chain and economics

- Data Cooperatives - How co-ops work

- Competitive Landscape - Epsilon, Wiland, etc.

Key Concepts

PROD vs PATH

| Project | Owner | Purpose |

|---|---|---|

| PROD | Karin (COO) | Product management — the “what” and “why” |

| PATH | John Malone | Development — the “how” |

Work flows from PROD → PATH at Gate 2 when requirements are complete.

Work Classification

| Category | Description |

|---|---|

| Initiative | Strategic projects, new products, exec-requested |

| Non-Initiative | Enhancements, medium features |

| Operational | Bug fixes, maintenance, small tweaks |

This knowledge base is maintained by the CTO with Claude Code assistance. Last updated: January 2026.

Path2Response Solution Overview

Source: New Employee Back-Office Orientation (2025-08)

Company Identity

Path2Response is a data cooperative specializing in customer acquisition through direct mail marketing, with expanding digital capabilities.

- Parent Company: Caveance (holding company)

- Taglines: Marketing updates taglines regularly; recent examples include “Marketing, Reimagined” and “Innovation and Data Drive Results”

- Mission: Foster a transparent culture of relentless innovation and excellence that empowers employees to flourish.

- Goal: Create great products that perform for our clients.

What We Do

“We are a data driven team that engages with brands who strive to provide relevant marketing to consumers. Path2Response is comprised of data visionaries and cutting edge technologists. Together, we are blazing a path to help our clients acquire new customers. We actively partner with our clients to provide innovative, data based solutions that allow them to create and maintain profitable relationships with their customers.”

Core Concepts

What is a Data Cooperative?

A data co-op is a group organized for sharing pooled data from consumers between two or more companies. Members contribute their transaction data and gain access to the cooperative’s combined data assets for prospecting.

What is Direct Mail?

Direct mail marketing is any physical correspondence sent to consumers in the hopes of getting them to patronize a client. This includes catalogs, postcards, letters, and other physical marketing materials.

RFM - The Foundation of Response Modeling

| Component | Description |

|---|---|

| Recency | How recently did someone make a purchase? |

| Frequency | How often do they purchase? |

| Monetary | How much do they spend? |

RFM analysis is fundamental to predicting consumer response behavior.

Core Product: Path2Acquisition

While Path2Response has various solution offerings, we focus on a single product: Path2Acquisition.

The other solutions are all variations on this product, focusing on particular needs and verticals:

- Path2Ignite - Performance-driven direct mail

- Digital Audiences - 300+ segments for digital channels

- Path2Contact - Reverse email append

- Path2Optimize - Data enrichment

- Path2Linkage - Data partnerships

Verticals We Serve

| Vertical | Description | Terminology |

|---|---|---|

| Catalog | Traditional catalog retailers | Customers, orders, purchases |

| Nonprofit | Charitable organizations | Donors, donations, gifts |

| Direct-To-Consumer (DTC) | Brands selling directly to consumers | Varies by brand |

| Non Participating | Companies using P2R data but not contributing | Limited access |

Important: Terms used throughout the system are Catalog-centric. Other verticals use equivalent terminology (e.g., “donors” instead of “customers” for Nonprofit).

The Path2Acquisition Flow

See Path2Acquisition Data Flow for the complete solution architecture diagram and detailed explanation.

Campaign Timeline

| Phase | Duration |

|---|---|

| Full campaign lifecycle | Many months |

| Merge Cutoff to Mailing | Weeks |

| Mail Date to Results | Several months |

This extended timeline is critical for understanding why response analysis and match-back processes span long periods.

Related Documentation

- Path2Acquisition Data Flow - Detailed solution architecture

- Glossary - Terms and definitions

- Products & Services - Complete product portfolio

Path2Acquisition Data Flow

Source: New Employee Back-Office Orientation (2025-08), Page 2 Diagram

This document explains the complete Path2Acquisition solution flow, from client onboarding through response analysis.

Flow Diagram Overview

The Path2Acquisition system processes data through several major stages:

┌─────────────┐ ┌─────────────┐ ┌─────────────┐ ┌─────────────┐

│ CLIENT │ → │ DATA │ → │ MODELING │ → │ DELIVERY │

│ INTAKE │ │ PROCESSING │ │ & SCORING │ │ & RESULTS │

└─────────────┘ └─────────────┘ └─────────────┘ └─────────────┘

Stage 1: Client Intake & Order Entry

Client Hierarchy

Broker

└── Client

└── Brand

├── Parent

│ └── Title ←── House File (via SFTP)

└── Sibling

| Term | Definition |

|---|---|

| Broker | List broker who represents the client |

| Client | The company purchasing prospecting services |

| Brand | A brand within the client’s portfolio |

| Title | A specific catalog or mailing program (e.g., “McGuckin Hardware”) |

| Parent/Sibling | Related titles within the same brand family |

| House File | Client’s existing customer database |

Order Process

- Order initiates the campaign

- Order App manages order entry and configuration

- Order Processing validates and routes the order

Transaction Types

| Type | Description |

|---|---|

| Complete/Replacement | Full file refresh replacing previous data |

| Incremental | New transactions added to existing data |

Stage 2: Data Ingestion & Processing

House File Processing

House File → CASS → Convert → Transactions → Data Attributes

↓

DPV, DPBC

| Process | Purpose |

|---|---|

| CASS | Coding Accuracy Support System - standardizes addresses |

| DPV | Delivery Point Validation - confirms address deliverability |

| DPBC | Delivery Point Bar Code - adds postal barcodes |

| NCOA | National Change of Address - updates moved addresses |

| Convert | Transforms data into standard format and layout |

Transaction Storage

- Transactions stored in JSON format

- Title Key links transactions to specific titles

- Data Tools provide processing utilities

Data Enrichment

- BIGDBM - External data provider for additional attributes

- Data Attributes - Enhanced consumer data points

Stage 3: Digital Data Integration

First-Party Data Sources

Site Tag ─┬─ Cookie

└─ Pixel

↓

Site Visitors → Extract → Individuals → Identities

↑

Hashed Email (HEM)

├── MD5

├── SHA-1

└── SHA-2

| Source | Description |

|---|---|

| Site Tag | JavaScript tracking code on client websites |

| Cookie | Browser-based visitor identification |

| Pixel | Image-based tracking for email/web |

| Site Visitors | Aggregated visitor behavior data |

| Hashed Email (HEM) | Privacy-safe email identifiers |

| First-Party Data | Data collected directly by the client |

Identity Resolution

- Extract process with CPRA compliance blocks

- Links digital Individuals to postal Identities

- Bridges online behavior to offline marketing

Stage 4: Householding

Process Flow

Individuals → Householding → Households File → Preselect

↓

┌────────┴────────┐

↓ ↓

Addresses Names

↓ ↓

DMA Deceased

Pander

| Process | Purpose |

|---|---|

| Householding | Groups individuals into household units |

| DMA | Direct Marketing Association preference processing |

| Deceased | Removes deceased individuals |

| Pander | Removes those on “do not contact” lists |

| Households File | Master file of valid households with IDs |

Output Branches

- Digital Solutions - For digital channel delivery

- Other Products - Path2Contact, Path2Optimize, etc.

- License Files - Data licensing arrangements

Stage 5: Title Responders & BERT

Title Responders

Identifies individuals who have responded to specific titles (catalogs/mailings).

BERT System

Order → BERT → Variables → Modeling

↑

Order App

Order Processing

BERT (Base Environment for Re-tooled Technology) is the unified backend application platform that consolidates internal tools (Order App, Data Tools, Dashboards, Response Analysis) into a single application. Within the Path2Acquisition flow, BERT:

- Manages order entry and processing (via Order App module)

- Generates modeling variables

- Feeds the scoring engine

Stage 6: Preselect & Universe Building

Preselect Process

Households File → Preselect → Universe

↓

┌───────┴───────┐

↓ ↓

Responder Prospect

| Term | Definition |

|---|---|

| Preselect | Defines the available universe for selection |

| Universe | Total pool of potential mail recipients |

| Responder | Someone who has responded to the title before |

| Prospect | Someone who has not yet responded to the title |

Synergistic Titles

Identifies related titles whose responders might respond well to the target title.

Example:

- Title: McGuckin Hardware

- Synergistic Titles: Home Depot, Lowes

- Question: What other titles would buyers of McGuckin also purchase from?

Suppressions

| Suppression | Meaning |

|---|---|

| DNM | Do Not Mail - complete mail suppression |

| DNR | Do Not Rent - cannot rent this name to others |

| Omits | Specific records to exclude |

| Suppressions | Categorical exclusions |

Stage 7: Modeling & Scoring

Model Types

Variables → Modeling → Score File

↓

┌──────┴──────┐

↓ ↓

Affinity Propensity

| Model Type | Purpose |

|---|---|

| Affinity | Likelihood to be interested in product category |

| Propensity | Likelihood to respond to specific offer |

Score File Output

- Segments - Grouped by score ranges (deciles, quintiles)

- Used to prioritize mail recipients by predicted response

Stage 8: Fulfillment

Process Flow

Score File → Fulfillment → Fulfillment File → Shipment

↓

Nth-ing

| Term | Definition |

|---|---|

| Fulfillment | Preparing the final mail file |

| Nth-ing | Statistical sampling (every Nth record) for testing |

| Fulfillment File | Final file ready for production |

Stage 9: Shipment & Merge/Purge

Shipment to Service Bureau

Fulfillment File → Shipment → Service Bureau → Mail File

↓

Merge / Purge

┌─────┴─────┐

↓ ↓

Hits Nets

| Term | Definition |

|---|---|

| Service Bureau | Third-party mail production facility |

| Merge/Purge | Combines lists and removes duplicates |

| Hits | Records that matched (duplicates found) |

| Nets | Unique records after deduplication |

| Computer Validation | Final address verification |

Merge Cutoff

The deadline for including names in the mailing. After this date, the file is locked for production.

Mail File Components

| Component | Purpose |

|---|---|

| Keycode | Unique identifier for tracking response source |

| Lists of Lists | Source attribution for each name |

| Multis | Names appearing on multiple lists |

Stage 10: Mailing

Output

Mail File → In-House / In-Home → Mailing

↓

Mail Date

| Term | Definition |

|---|---|

| In-House | Mailing arrives at household |

| In-Home | Consumer has opened/seen the mailing |

| Mail Date | Date the mailing enters the postal system |

Stage 11: Response Analysis

Tracking Response

Mailing → Transaction → Response Analysis → Results

↓ ↓

Response ┌────────┴────────┐

↓ ↓ ↓

Match Back Response Rate Client Results

& RR Index

Match Back Process

Links responses (purchases) back to the original mailing to measure effectiveness.

Key Metrics

| Metric | Description |

|---|---|

| Response Rate | Percentage of recipients who responded |

| RR Index | Response rate indexed against baseline |

| AOV | Average Order Value |

| $ Per Book | Revenue generated per catalog mailed |

| $ / Book Index | Revenue indexed against baseline |

Final Output

- Client Results - Performance report for the client

- Recommendation - Guidance for future mailings

Campaign Timeline Summary

Order → [Weeks] → Merge Cutoff → [Weeks] → Mail Date → [Months] → Results

↑ ↓

└───────────────── Campaign Cycle ──────────────────────────┘

(Many Months)

| Phase | Duration |

|---|---|

| Order to Merge Cutoff | Varies (planning, data prep) |

| Merge Cutoff to Mail Date | Weeks (production) |

| Mail Date to Results | Several months (response window) |

| Full Campaign | Many months |

System Components Reference

| System | Function |

|---|---|

| BERT | Base Environment for Re-tooled Technology - unified backend application platform |

| Order App | Order entry and management (module within BERT) |

| Data Tools | Data processing utilities (module within BERT) |

| Dashboards | Reporting and analytics (module within BERT) |

| BIGDBM | External data enrichment provider |

Related Documentation

- Solution Overview - High-level company and product overview

- Glossary - Complete term definitions

- Products & Services - Product portfolio details

Path2Response Glossary

Source: New Employee Back-Office Orientation (2025-08) and internal documentation

This glossary defines key terms used throughout Path2Response systems and processes. For industry context, see the Industry Knowledge Base.

Contributing

When researching projects, watch for undefined terms:

- During research - Note unfamiliar acronyms or domain terms

- Check glossary - See if term is already defined

- Add if missing - Include definition with source context

- Format - Bold term, followed by definition paragraph

Example addition:

**PBT (Probability by Tier)**

A model quality metric showing the probability distribution across decile segments. Better models show deeper differentiation between high and low segments.

Recent additions: XGBoost, P2A3XGB, FTB, MPT, Future Projections, Hotline Scoring, ARA, ERR, MGR, Encoded Windowed Variables, Responder Interval (Jan 2026, from DS documentation); DRAX, Orders Legacy, Shiny Reports (Jan 2026, from BERT documentation); PBT (Jan 2026, from MCMC project); Convert Type, Data Bearing, Globalblock, Disabled Title, Parent Title, File Type Tag, Transaction Type, Data Call (Jan 2026, from Data Acquisition SOP)

A

Affinity A model type measuring likelihood of interest in a product category based on behavioral patterns.

Anonymous Visitor A website visitor who is not logged in or otherwise identified by the brand. PDDM solutions like Path2Ignite can match anonymous visitors to postal addresses through co-op data matching, enabling direct mail retargeting.

AOV (Average Order Value) The average dollar amount per order, used to measure campaign profitability.

ARA (Automated Response Analysis) Data Science tool that measures campaign performance by matching responses (purchases/donations) back to mailed households. Provides reports via a Dash app.

AUC (Area Under Curve) Model quality metric measuring the area under the ROC curve. High AUC (top 90s) with low responder count indicates overfitting.

AUCPR (Area Under Precision-Recall Curve) Model quality metric, alternative to AUC, particularly useful for imbalanced datasets like response prediction.

Automation Discount USPS postage discount for mail pieces that can be processed by automated equipment. Requires readable barcodes (IMb) and standard dimensions.

B

BERT (Base Environment for Re-tooled Technology) Path2Response’s unified backend application platform that consolidates internal tools into a single application with a common codebase. BERT incorporates Order App, Data Tools, Dashboards, Response Analysis, and other internal applications. Named in August 2023 (submitted by Wells) after Albert Einstein’s pursuit of a unified field theory, with the Sesame Street character enabling a naming convention for related tools (Ernie, Big Bird, Oscar, etc.).

BIGDBM External data provider used for data enrichment and additional consumer attributes.

Best Name In fulfillment processing, the optimal individual/name associated with a household for direct response marketing. Path2Response outputs the “best name” for each household, which may differ from the original name on a client-provided file. This ensures the most likely responder in the household receives the mailing. Used in standard P2A fulfillments and Path2Optimize. See also: Path2Optimize, Householding.

Brand A specific brand within a client’s portfolio. Multiple brands may share a parent client.

Balance Model A post-merge solution that identifies names representing an incremental direct mail audience after the client’s merge/purge process. Provides additional mailing volume for clients with strong pre-merge results.

Three Balance Model solutions:

| Solution | Product | Description |

|---|---|---|

| Modeled Prospects | Path2Acquisition | Custom model for incremental prospects |

| Prospect Site Visitors | — | 30-day prospect site visitors (requires tag) |

| Housefile Site Visitors | Path2Advantage | Housefile visitors (default 30 days) |

Criteria: Strong pre-merge results required (not for mediocre performers), recommended minimum 10M order qty, no significant backend fulfillment processing. Great option for tagged clients.

Turnaround: Same day if file arrives by 9 AM MST (delivery EOD MST); optimal 24 hours.

Positioning:

- Optimal: P2R provides names on frontend (pre-merge) AND backend (post-merge)

- Less than ideal: Backend only (may cause revenue cannibalism)

- Catalog: Lead with Site Visitors, consider higher price point

- Nonprofit: Lead with Path2Acquisition, Site Visitors as options

Revenue: >$550K since 2019 without promotion; 2024 had 23 titles (22 Nonprofit), >$92K revenue, median qty 21,000.

See also: Path2Advantage, Post-Merge, Merge/Purge.

Breakeven The response rate needed for a campaign to cover its costs. Calculated based on campaign costs, average order value, and margin.

Broker See List Broker.

C

Commingling Combining mail from multiple mailers to achieve higher presort densities and better postal discounts. Enables smaller mailers to access discounts normally requiring higher volumes.

Compiled List A mailing list assembled from public and commercial sources (directories, registrations, surveys) rather than actual purchase behavior. Lower cost but typically lower response rates than response lists.

CPM (Cost Per Mille) Cost per thousand - the standard pricing unit for mailing lists. A list priced at $100 CPM costs $100 per 1,000 names.

Campaign A complete direct mail initiative from order through response analysis. Campaigns typically span many months.

CASS (Coding Accuracy Support System) USPS system that standardizes addresses and adds delivery point codes. Required for postal discounts.

Caveance The holding company that owns Path2Response, LLC.

CCPA (California Consumer Privacy Act) California privacy law governing consumer data rights. See also CPRA.

Convert Type In Data Acquisition, the fundamental classification of incoming data files:

- House: Records part of the target title’s house file; suppressed for prospect fulfillments; does not support transactional fields

- Transaction: Records with transactional data that link to house file records via customerId

- Master: Records not associated to a specific target title; affect overall database or modeling (e.g., DMA, select, omit)

See also: File Type Tag, Data Acquisition Overview.

Client A company purchasing prospecting or data services from Path2Response.

Complete/Replacement A transaction file type that fully replaces previous data (as opposed to incremental updates).

Computer Validation Final address verification performed by the service bureau before mailing.

Convert The process of transforming client data into Path2Response’s standard format and layout.

Cookie Browser-based identifier used for tracking website visitors.

CPRA (California Privacy Rights Act) Updated California privacy regulation that supersedes CCPA. Path2Response is compliant.

D

Data Attributes Enhanced consumer data points derived from transaction history and external sources.

Data Bearing (Title) A title that contributes data to the Path2Response cooperative database. Controlled by the “disabled” checkbox in Data Tools. Non-data-bearing titles (disabled) have data that cannot be used in model runs. See also: Disabled Title, Globalblock.

Data Call A client onboarding meeting where Data Acquisition discusses data requirements, file formats, and transfer processes with a new client. New client file analysis SLA is 3 business days with a data call vs 5 business days without one.

Data Broker A business that aggregates consumer information from multiple sources, analyzes/enriches it, and sells or licenses it to third parties. Distinct from list brokers (who facilitate rentals) and data cooperatives (member-based).

Data Cooperative (Data Co-op) A group organized for sharing pooled consumer data between member companies. Members contribute transaction data and gain access to the cooperative’s combined assets. Examples: Path2Response, Epsilon/Abacus, Wiland.

DDU (Destination Delivery Unit) The local post office that handles final mail delivery. Entering mail at DDU level provides the highest postal discount but is logistically complex.

Data Tools Path2Response utilities for data processing and manipulation.

Deceased Suppression process that removes deceased individuals from mail files.

Direct Mail Physical correspondence sent to consumers to encourage patronage of a client.

Disabled (Title) A title status flag in Data Tools indicating the title’s data cannot be used in model runs. Disabled titles will not be selected in preselect. Non-contributing titles should be marked as disabled but NOT marked as DNR/DNM. See also: Data Bearing, Globalblock.

DMA (Direct Marketing Association) Industry organization; also refers to preference processing for consumers who opt out of marketing.

DNM (Do Not Mail) Suppression flag indicating a consumer should not receive mail from any source.

DNR (Do Not Rent) Suppression flag indicating a name cannot be rented to other mailers (but owner can still mail).

DPV (Delivery Point Validation) USPS verification that confirms an address is deliverable.

DPBC (Delivery Point Bar Code) Postal barcode added to mail pieces for sorting efficiency.

Deciles Division of a scored audience into 10 equal groups ranked by predicted response. Decile 1 is highest predicted responders; Decile 10 is lowest. See also Quintiles, Segments.

Direct Mail Agency A full-service marketing firm that manages direct mail campaigns for brands, handling strategy, creative, list selection, production, and analytics. Example: Belardi Wong.

DTC (Direct-To-Consumer) Business model where brands sell directly to consumers rather than through retailers.

Dummy Fulfill / Dummy Ship Internal operational process where a fulfillment is generated but shipped only to Path2Response (orders@path2response.com) rather than the client. Used in Path2Expand workflow to capture the P2A names that need to be excluded from the P2EX universe. After dummy fulfillment, the ImportID is added to Prospect/Score Excludes for the subsequent model. See also: Path2Expand, ImportID.

DRAX Path2Response’s reporting system that replaced the legacy Shiny Reports application. Part of the Sesame Street naming convention for tools related to BERT. Contains two main dashboards:

- Guidance for Preselect Criteria - Summary of client titles, household versions, database growth, housefile reports

- Title Benchmark Report - Summary statistics for variables across titles

E

Encoded Windowed Variables Data Science innovation that compresses multiple time-windowed RFM variables into a single dense variable per title. Solves the problem of windowed variables not making it into the final 300-variable model due to low density. Uses cumulative encoding across windows (30, 60, 90, 180, 365, 720, 9999 days). Resulted in 13-92% increase in unique titles used in final models.

Important: “Encoded Variables” is an internal term only — do not use with clients. This is NOT a new product, new model, or new data source. It’s an enhancement that helps the existing model make better use of existing data. When discussing with clients, frame as improved leverage of recency and synergy signals, which are universally understood terms. See Client Messaging section in data-science-overview.md.

Epsilon/Abacus Major data cooperative competitor (part of Publicis Groupe). Epsilon acquired Abacus Alliance and operates one of the largest marketing data platforms globally.

ERR (Estimated Response Rate) Model metric showing the estimated likelihood a responder will purchase, based on Future Projection and Model Projection. Used to compare different model configurations on the Model Grade Report.

Extract Process that pulls individual-level data from site visitors while applying privacy compliance blocks.

F

First-Party Data Data collected directly by the client from their own customers and website visitors.

Format The structure/layout specification for data files.

Frequency In RFM analysis, how often a consumer makes purchases.

Fulfillment The process of preparing the final mail file for production.

Fulfillment File The completed file ready for shipment to the service bureau.

File Type Tag In Data Acquisition, a classification tag applied to incoming data files that determines how they are processed and converted. Common tags include:

- suppression (house): DNR/DNM housefile names

- transaction (transaction): Housefile transactions only

- complete (transaction): Combined names + transactions

- house (house): Housefile names only

- inquiry (transaction): Catalog requesters

- shipto (house): Ship-to addresses/giftees

- select/omit (master): Files for modeling selection/omission

- mailfile (master): Consumers mailed or to be mailed

- response (master): People who responded to a mailing

See Data Acquisition Overview for complete list.

FTB (First Time Buyer) Model configuration option that limits responders to only those making a purchase for the first time (according to co-op data). Default buyer type for tagged titles.

Future Projections Data Science process to determine the optimal responder interval for model training. Evaluates different responder interval scenarios and delivers comparative correlations between responder intervals and the measurement interval. Key insight: models predict past response, but campaigns need to predict future response.

G-H

Globalblock A client status flag indicating the client’s data is not contributed to the Path2Response cooperative database. Unlike disabled titles (which are loaded but not used in modeling), globalblocked clients have data that is completely excluded from the database. See also: Disabled Title, Data Bearing.

Gross to Net (Gross → Net) The reduction from raw record count to usable record count during file processing. In Path2Optimize, the “gross” count of records on a client-provided file reduces to a “net” count of records that can be matched to qualified individuals/households in the P2R database. Match rates vary based on file quality, recency, accuracy, and duplication. See also: Path2Optimize.

Hashed Email (HEM) Privacy-safe email identifier created through one-way hashing algorithms (MD5, SHA-1, SHA-2).

Holdout Panel A control group that does not receive a mailing, used to measure the incremental impact of a campaign. Results are compared between mailed and holdout groups to calculate true lift.

Hits In merge/purge, records that matched across multiple lists (duplicates found).

House File A client’s existing customer database, typically provided via SFTP.

Householding Process of grouping individuals into household units to prevent duplicate mailings to the same address.

Households File Master file of valid, deduplicated households with unique IDs.

Hotline Scoring Simple regression model that scores prospects with browse data at the target title. Used for programmatic direct mail (PDDM, Swift) and to incorporate browsers for titles whose tag is too new for XGBoost.

I

ID Unique identifier assigned to households or individuals in the system.

Identities Resolved identity records linking digital behavior to postal addresses.

In-Home When a mailing has been delivered and the consumer has seen/opened it.

In-House When a mailing has arrived at the household.

Incremental A transaction file type that adds new records to existing data (as opposed to complete replacement).

Individuals Person-level records extracted from site visitor data.

Intent Signal Behavioral data indicating purchase intent, such as cart abandonment, pages visited, time on site, or order placed. Used in PDDM to identify and prioritize high-value prospects.

IMb (Intelligent Mail Barcode) USPS barcode standard required for automation discounts. Contains routing information and unique piece identifiers for tracking.

ImportID Unique identifier for a fulfillment file. Used in Path2Expand workflow to exclude P2A fulfillment names from the P2EX universe by adding the ImportID to the Prospect/Score Excludes field. See also: Path2Expand, Dummy Fulfill.

Incoming Routes

SFTP paths configured in Data Tools for receiving client data files. Routes should be set under the parent title even if the parent::child duplicate is not data bearing. For example, “Vionic” incoming routes are set under “Caleres” (the parent). The parent can be identified from the <parent::child> syntax in dropdown searches.

J-K

JSON Data format used for storing transaction records.

Keycode Unique identifier printed on mail pieces to track response source.

L

Layout The field structure and format specification for data files.

Lettershop A facility specializing in mail piece assembly and preparation, including inserting, tabbing, labeling, and sorting. Often part of a service bureau.

License Files Data provided through licensing arrangements rather than cooperative membership.

Lift The improvement in response rate achieved through modeling or optimization. Example: “Co-op optimization delivered 25% lift over unscored names.”

LTV (Lifetime Value) The total revenue a customer is expected to generate over their entire relationship with a brand. PDDM campaigns aim to acquire customers with higher LTV and increase LTV of existing customers through retention.

List Broker A specialist who helps mailers find and rent appropriate mailing lists. Earns commission (~20%) from list managers. No additional cost to mailer for using a broker.

List Exchange A barter arrangement where two organizations trade one-time access to their mailing lists, often at no cost or minimal processing fees.

List Manager A company that represents list owners, handling rentals, maintenance, promotion, and compliance monitoring (including seed name tracking).

List Owner An organization that owns customer/subscriber data available for rental (e.g., magazines, catalogs, nonprofits).

List Rental One-time use of a mailing list owned by another organization. Names cannot be reused without paying again. Lists typically include seed names to verify compliance.

Lists of Lists Attribution showing which source list(s) each name originated from.

M

Marketing Mail USPS mail class (formerly Standard Mail) used for advertising, catalogs, and promotions. Lower cost than First Class but slower delivery (3-10 days).

Mail Date The date a mailing enters the postal system.

Mail File The final, production-ready file sent for printing and mailing.

Mailing The actual direct mail campaign sent to consumers.

Match Back Process of linking responses (purchases) back to the original mailing to measure effectiveness.

MD5 Hashing algorithm used for creating hashed email identifiers.

Merge Cutoff Deadline for including names in a mailing. After this date, the file is locked for production.

Merge/Purge Process of combining multiple lists and removing duplicates.

Modeling Statistical analysis to predict consumer response behavior.

Monetary In RFM analysis, how much a consumer spends.

MGR (Model Grade Report) A Data Science report containing key model quality metrics: AUC, AUCPR, and ERR. Used to evaluate whether a model is performing well before deployment. High AUC values (90s) with few responders may indicate overfitting. See also: AUC, AUCPR, ERR, PBT.

MPT (Model Parameter Tuning) Process of determining the best preselect criteria and model settings to achieve the optimal estimated response rate (ERR). Steps include: setting circulation size, running Future Projections, determining responder interval, comparing prospecting types via 5% models. Future Projections must complete before 5% models are run. See also: Future Projections, ERR.

Multi-Buyer See Multis.

Multis Names appearing on multiple source lists, identified during merge/purge. Multi-buyers typically show higher response rates and are often treated as a premium segment.

N

Names Consumer name data within the householding process.

NCOA (National Change of Address) USPS database used to update addresses for consumers who have moved. Typically covers 48 months of address changes.

NDC (Network Distribution Center) Large regional USPS facilities that serve as hubs for mail processing. NDC destination entry discounts were eliminated in July 2025.

Nets Unique records remaining after merge/purge deduplication.

Nonprofit Vertical serving charitable organizations. Uses terminology like “donors,” “donations,” “gifts.”

Nth-ing Statistical sampling technique selecting every Nth record, typically for testing.

O

Omits Specific records to exclude from a mailing.

Order The initial request that initiates a campaign.

Order App System for order entry and campaign management. Now a module within BERT. The legacy deployment (“Orders Legacy”) remains operational while final functionality (Shipment) is ported to BERT.

Order Processing Validation and routing of orders through the system.

Orders Legacy The original standalone Order App deployment on EC2. Being phased out as functionality is migrated to BERT. Shipment is the last remaining functionality to port; once complete, Orders Legacy will be decommissioned.

P

Pander Suppression list of consumers who have requested not to be contacted.

Parent (Title)

A primary title that has related sibling/child titles. Clients with multiple titles should have a parent title identical to the client name. Data contacts, incoming routes, and fulfillment routes should be set under the parent title. Child titles use the syntax <parent::child> in Data Tools dropdowns. Example: “Vionic” is a child under “Caleres” (parent). See also: Sibling, Incoming Routes.

Path2Acquisition Path2Response’s core product for customer acquisition through prospecting. Uses XGBoost classification models (P2A3XGB) to predict response likelihood. Can be used in Balance Model position (post-merge) to provide incremental prospects. See also: P2A3XGB, Balance Model.

Path2Advantage Path2Response’s housefile site visitor product. Identifies existing customers who have recently visited the client’s website, enabling re-engagement campaigns. Used in Balance Model position (post-merge) to provide incremental housefile names. Default window is last 30 days. Potential limitation: may generate small quantities for some titles. Requires client to have a tracking tag on their website.

Path2Expand (P2EX) Product that provides incremental prospect names beyond the primary Path2Acquisition universe. Cannot be run standalone — Path2Acquisition must always run first in the order.

How it works:

- Run Path2Acquisition model normally (MPT, 5% counts, front-end omits)

- P2A names are excluded from P2EX universe (via ImportID in Prospect/Score Excludes)

- P2EX uses same model parameters as P2A (cloned model)

- When fulfilling both models, P2A is Fulfillment Priority #1

Example: Client orders 250K from P2A model with segment size 100K. Dummy fulfill/ship 300K to omit on frontend of P2EX model, then P2EX provides additional names beyond P2A.

Key constraint: Model parameters must NOT be changed between P2A and P2EX — only the Prospect/Score Excludes field is modified.

See also: Path2Acquisition, Balance Model.

Path2Ignite Path2Response’s Performance-Driven Direct Mail (PDDM) product. Combines site visitor identification with co-op transaction data for triggered/programmatic direct mail with 24-48 hour turnaround. Can be white-labeled by agencies. See also Path2Ignite Boost, Path2Ignite Ultimate.

Path2Ignite Boost Path2Ignite variant that delivers top Path2Acquisition modeled prospects daily. Model is rebuilt weekly with fresh data. Audiences are incremental to (not duplicates of) site visitor-based Path2Ignite audiences.

Path2Ignite Ultimate Path2Ignite variant combining both site visitor-based targeting (Path2Ignite) and model-based targeting (Boost) for maximum daily growth potential.

Path2Optimize (P2O) Data enrichment product that scores and optimizes client-provided files with minimal transactional data. Available for Contributing and non-Participating brands.

How it works:

- Client provides file (leads, compiled lists, retail data, lapsed customers, etc.)

- P2R matches records to qualified individuals/households in database (“Gross → Net”)

- Net identified records become the scoring universe

- P2A3XGB model scores records using client’s own responders (if available) or proxy responders from similar category titles

- Output: Optimized name/address, original client ID, segment/tier indicator

Common file types optimized:

- Sweeps/giveaway entries

- Top-of-funnel leads (limited data elements)

- Compiled/rental lists purchased from other brands

- Retail store location data

- Lapsed customer files

- Cross-sell files from other titles within parent company

- Housefile portions needing optimization

Key concepts:

- Gross → Net: Raw file count reduces to subset that matches P2R database; varies by file quality

- Best Name logic: Outputs optimal individual for direct response in household (may differ from original name)

- June 2025 enhancement: Can now return client-provided ID (CustID, DonorID) with fulfillments

See also: P2A3XGB, Best Name, Proxy Responders.

PBT (Probability by Tier) A model quality visualization showing the predicted probability of response across 10 equal-sized tiers (segments). The graph uses box plots to display the distribution of probabilities within each tier. A good PBT graph shows a smooth decline from tier 1 (highest probability) to tier 10 (lowest probability). Key elements:

- X-axis: Tiers 1-10 (segments ranked by predicted response probability)

- Y-axis: Probability of response

- Blue box: Middle 50% of probabilities (interquartile range)

- Whiskers: Top and bottom 25% of probabilities

- Orange line: Median probability for that tier

- “Depth”: The vertical spread between tier 1 and tier 10 - greater depth indicates stronger model discrimination A flat or erratic PBT graph indicates a model problem requiring Data Science review. See also: Model Grade Report, IRG.

P2A3XGB Path2Response’s production XGBoost classification model for Path2Acquisition. The pipeline consists of six steps: Train Select, Train, Score Select, Score, Reports Select, Reports. Initial training uses 50,000+ variables with up to 110,000 households; the final scoring model uses only the top 300 most important variables to efficiently score 35+ million households. See also: XGBoost, Encoded Windowed Variables.

PDDM (Performance-Driven Direct Mail) Category of direct mail products that use real-time behavioral triggers (site visits, cart abandonment) to send personalized mail quickly. Path2Response’s product in this category is Path2Ignite.

PII (Personally Identifiable Information) Data that can identify an individual. Limited usage at Path2Response.

Pixel Image-based tracking mechanism for email and web.

Preselect Process defining the available universe of names for selection.

Presort Sorting mail by ZIP code/destination before presenting to USPS. Deeper presort levels (5-digit, carrier route) earn higher discounts.

Priority (List) In merge/purge, the ranking that determines which source “owns” a duplicate name. House file typically gets highest priority, followed by best-performing rented lists.

Programmatic Direct Mail Automated, trigger-based direct mail that responds to real-time events (site visits, cart abandonment). Also called triggered mail. See also PDDM, Path2Ignite.

Propensity A model type measuring likelihood to respond to a specific offer.

Post-Merge The phase after a client’s merge/purge process is complete. Balance Models operate in the post-merge position, providing incremental names after duplicates have been removed. Path2Response optimally provides names both pre-merge (standard Path2Acquisition) and post-merge (Balance Model). See also: Balance Model, Pre-Merge, Merge/Purge.

Pre-Merge The phase before a client’s merge/purge process, when list sources are assembled but not yet deduplicated. Standard Path2Acquisition orders are delivered pre-merge. Clients with strong pre-merge results are candidates for post-merge Balance Models. See also: Post-Merge, Balance Model, Merge/Purge.

Proxy Responders In modeling, a pool of responders from a cohort of similar titles used when the client’s own customer data is unavailable or insufficient for model training. Used in Path2Optimize when scoring client-provided files that lack transactional history. The proxy responders are drawn from titles in the same category as the client. See also: Path2Optimize, Responder.

Prospect A consumer who has not yet responded to a specific title.

Q

Quintiles Division of a scored audience into 5 equal groups ranked by predicted response. Similar to deciles but with 5 groups instead of 10. See also Deciles, Segments.

R

Recency In RFM analysis, how recently a consumer made a purchase.

Recommendation Guidance provided to clients for future mailings based on results analysis.

Responder A consumer who has previously responded to a specific title.

Responder Interval The time period during which responder data is collected for model training. Two types: Recency (most recent months with data) and Seasonal (same months from prior year, for seasonal products). Future Projections helps determine the optimal responder interval. See also: Future Projections, Prospect Range, windowedEndDate.

Response A consumer action (typically a purchase) resulting from a mailing.

Response Analysis Process of measuring and evaluating campaign performance.

Response List A mailing list of people who have taken an action (purchased, donated, subscribed). Higher quality and cost than compiled lists because it includes proven responders.

Response Rate

Retargeting Marketing to people who have previously interacted with a brand (visited website, abandoned cart, etc.) but did not convert. Direct mail retargeting sends physical mail to these visitors. See also PDDM, Triggered Mail.

Remarketing Often used interchangeably with retargeting. Refers to re-engaging consumers across channels (online and offline) after initial interaction. Percentage of mail recipients who responded (purchased).

Results Campaign performance data including response rates and financial metrics.

RFM (Recency, Frequency, Monetary) Fundamental framework for analyzing and predicting consumer behavior.

RR Index Response rate indexed against a baseline for comparison.

S

SCF (Sectional Center Facility) USPS facilities serving specific 3-digit ZIP code areas. SCF destination entry provides postal discounts (reduced in July 2025 but still significant).

Score File Output of modeling containing consumer scores and segments.

Seed Names Decoy addresses inserted into rented lists by list owners to verify one-time use compliance and monitor mail content/timing. Unauthorized re-use is detected when seeds receive duplicate mailings.

Segments Groups of consumers categorized by score ranges (deciles, quintiles, etc.).

Selects Additional targeting criteria applied to a mailing list (e.g., recency, geography, dollar amount). Each select typically adds $5-$25/M to base list price.

Service Bureau Third-party facility that produces and mails direct mail pieces. Services include merge/purge, printing, lettershop, and postal optimization.

SFTP Secure File Transfer Protocol - method for transferring house files and data.

SHA-1, SHA-2 Secure hashing algorithms used for creating hashed email identifiers.

Shiny Reports Legacy R-based reporting application. Fully replaced by DRAX. See DRAX.

Shipment Delivery of fulfillment file to the service bureau.

Sibling A related title within the same brand family.

Site Tag JavaScript tracking code placed on client websites to capture visitor behavior.

Site Visitors Aggregated data on website visitor behavior.

SPII (Sensitive PII) Highly sensitive personal data (SSN, credit card, passwords). NOT allowed at Path2Response.

Suppressions Categorical exclusions from mailings.

Swift Belardi Wong’s programmatic postcard marketing product. Uses SmartMatch (visitor identification) and SmartScore (predictive scoring). Path2Response provides the data layer for Swift.

Synergistic Titles Related titles whose responders are likely to respond well to a target title.

T

Triggered Mail Direct mail automatically sent based on a behavioral trigger (site visit, cart abandonment, inactivity). See also Programmatic Direct Mail, PDDM.

Title A specific catalog or mailing program (e.g., “McGuckin Hardware”).

Title Key Unique identifier linking transactions to specific titles.

Title Responders Consumers who have responded to a specific title.

Transaction A purchase record from a consumer.

Transaction Type In Data Acquisition, a classification applied to transactional records based on the nature of the action:

- purchase - Amount > 0; maps to PURCHASE for modeling

- donation - Charitable gift; maps to PURCHASE

- subscription - Advance payment for recurring services; maps to PURCHASE

- return - Amount < 0

- inquiry - Catalog request; amount = 0

- cancellation - Cancelled order

- pledge - Commitment to future donation

- shipment - Where items shipped (replaces deprecated “giftee”)

- unknown - Catch-all; should not be modeled

“The Subscription Line”: Netflix (monthly billing) is NOT a subscription; National Geographic (advance payment for recurring delivery) IS a subscription.

Transactions The collection of purchase records stored in the system.

U

Universe The total pool of potential mail recipients available for selection.

V

Variables Data points generated by BERT and used for modeling.

Verticals Industry categories served: Catalog, Nonprofit, DTC, Non Participating.

W

Windowed Variables (Simulation Variables)

Variables based on windowedEndDate that capture purchasing behavior within specific time windows: 30, 60, 90, 180, 365, 720, or 9999 days. Challenge: rarely make it into the final 300 model variables due to low density in smaller titles or time windows. Solution: Encoded Windowed Variables compress multiple windows into single dense variables. See also: Encoded Windowed Variables, windowedEndDate.

windowedEndDate The date that defines “current time” for model training, set to a date in the past. All windowed variables are calculated relative to this date. Critical rules: (1) must be the same for both prospects and responders; (2) Prospect End Date = windowedEndDate; (3) Prospect Start Date = 1 year before windowedEndDate; (4) Responder Start Date = 1 day after windowedEndDate. See also: Windowed Variables, Responder Interval, Prospect Range.

White Label A product or service produced by one company that other companies rebrand and sell as their own. Path2Ignite can be white-labeled by agencies, who manage the client relationship while Path2Response provides the data/audience layer invisibly.

Wiland Major data cooperative competitor headquartered in Niwot, CO. Known for 160B+ transactions, heavy ML/AI investment, and privacy-conscious approach (doesn’t sell raw consumer data).

Worksharing USPS term for mailers doing work (sorting, transporting, preparing) that USPS would otherwise perform. Worksharing earns postal discounts.

X

XGBoost (eXtreme Gradient Boosting) A gradient boosting framework using decision trees, and the core technology behind Path2Response’s P2A3XGB model for Path2Acquisition. Well-suited for: large datasets with many variables, binary classification (responder vs non-responder), handling missing values, and feature importance ranking. See also: P2A3XGB, Modeling, Variables.

$ Metrics

$ Per Book Revenue generated per catalog mailed.

$ / Book Index Revenue per book indexed against a baseline for comparison.

Related Documentation

- Solution Overview - Company and product overview

- Path2Acquisition Flow - Complete solution architecture

- Products & Services - Product portfolio details

- Data Acquisition Overview - Data Ops processes and SLAs

- Production Team Overview - Client Support Services processes

- Data Science Overview - Modeling and DS processes

- Industry Knowledge Base - Direct mail industry context

Path2Response Company Overview

Related Documentation:

- Solution Overview - Mission, core concepts, verticals

- Path2Acquisition Flow - How the core product works

- Glossary - Term definitions

Company Identity

Official Name: Path2Response, LLC Parent Company: Caveance (holding company) Taglines: Marketing updates taglines regularly; recent examples include “Marketing, Reimagined” and “Innovation and Data Drive Results” Industry: Marketing Data Cooperative / Data Broker Founded: ~2015 (celebrating 10-year anniversary in 2025)

Mission & Vision

Path2Response was founded on the principle that “There has to be a better way” for how data cooperatives operate. The company focuses on creating marketing audiences using multichannel datasets combined with advanced machine learning.

Core Mission: Help clients reach their ideal campaign audiences using real-world consumer spending behavior data.

Location

Path2Response is a fully remote company with employees distributed across ~15 US states.

Corporate Address (legal/mail only): 390 Interlocken Crescent, Suite 350 Broomfield, CO 80021

Contact:

- Phone: (303) 927-7733

- Email: inquiries@path2response.com

- LinkedIn: https://www.linkedin.com/company/path2response-llc/

Key Differentiators

Multichannel Dataset

Integrates online and offline transaction and behavioral data to identify signals competitors miss.

Machine Learning Approach

Uses a single configurable model with thousands of iterations based on billions of data points, emphasizing automated data processing rather than manual methods.

Performance Focus

97% client retention rate with 20-60% performance lift for campaigns using their digital data.

Data Freshness

Daily updates to their database, with weekly updates to individual-level purchase transaction records.

Company Scale

- Data Volume: Billions of individual-level purchase transaction records

- Digital Signals: 5B+ unique site visits daily

- Coverage: Nationwide consumer spend behavior across 40+ product categories

- Update Frequency: Data updated weekly; digital behavior signals daily

Regulatory Status

Path2Response is registered as a data broker under Texas law with the Texas Secretary of State.

Industry Recognition

- 97% client retention rate

- 30+ years of collective leadership experience in cooperative database marketing

- Industry pioneers who “launched the marketing cooperative data industry”

Path2Response Products & Services

Product Portfolio Overview

Path2Response offers a suite of data-driven marketing solutions built on their cooperative database of billions of consumer transaction records.

For technical understanding: See Path2Acquisition Flow for how the core product works, and Glossary for term definitions.

Core Products

Path2Acquisition

What It Is: Custom audience creation solution for new customer acquisition.

How It Works:

- Leverages billions of individual-level consumer purchase behaviors

- Creates thousands of model iterations to identify optimal performance and maximum scale

- Delivers custom audiences within hours (not days or weeks)

- Combines online and offline spending patterns

Key Features:

- Custom audiences tailored to client goals and online signals

- Machine learning reduces human bias

- Multichannel data at scale

- Results transparency before launch

Use Cases: Direct mail campaigns, display advertising, multichannel marketing

Path2Ignite (Performance Driven Direct Mail)

What It Is: Performance-driven direct mail (PDDM) solution that sends postal mail to recent website visitors—both known customers/donors and anonymous visitors—combining first-party co-op data with site visitor intent signals.

Core Concept: PDDM is automated “trigger” or “programmatic” mail deployed as close to real-time as possible. Daily mailings maximize recency as a performance driver. Typically uses cost-efficient formats (postcards, trifolds) that are quick to print.

Product Variants

| Product | Description | Data Source |

|---|---|---|

| Path2Ignite | Target most recent site visitors (housefile + prospects) daily | Site visitor-based |

| Path2Ignite Boost | Engage top prospects daily with fresh modeled audiences | Model-based (Path2Acquisition model rebuilt weekly) |

| Path2Ignite Ultimate | Combination of both for maximum daily growth | Site visitors + modeled prospects |

How It Works

Path2Ignite (Site Visitor-Based):

- Day Zero: Client’s site visitors captured via tags; data feed received by Path2Response

- Next Day: Site visitors matched and optimized with proprietary scoring algorithm

- Fulfillment: Audience fulfilled to printer/mailer

- Mail: Deployed into USPS mail stream

Path2Ignite Boost (Model-Based):

- Client’s Path2Acquisition model custom-built with most recent data (rebuilt weekly)

- Top modeled prospect audiences fulfilled to printer daily

- Audiences are unique from/incremental to site visitor-based audiences

Intent Signals Used

- Cart abandonment

- Pages visited

- Order placed/amount

- Session-specific behavior

- Co-op transaction data matching

Use Cases

New Customer/Donor Acquisition:

- Reach anonymous site visitors through a unique multichannel touchpoint

- PDDM as part of integrated acquisition strategy

Existing Customer/Donor Engagement:

- Active customers: Increase retention, drive repeat transactions, boost LTV

- Lapsed customers: Reactivate—a recent website visit means they’re “raising their hands”

Proven Results (vs. Holdout Panels)

| Segment | Vertical | $/Piece Index |

|---|---|---|

| Housefile | Home/Office Retail | 279 |

| Housefile | Footwear | 269 |

| Housefile | Furniture | 689 |

| Prospects | Men’s Apparel | 137 |

| Prospects | General Merchandise | 195 |

| Prospects | Home Décor | 180 |

Go-to-Market Models

White-Label (for Agencies):

- Agency manages order process, creative, printer selection

- Path2Response provides data/audiences invisibly

- Agency owns client relationship

Data Provider (for Agency Partners like Belardi Wong):

- Path2Response provides data layer for agency’s branded product (e.g., Swift)

- Path2Response not visible to end client

Implementation Process

- Agency Setup: White-labels Path2Ignite; manages order process, sends customer file, owns creative and printer selection

- Privacy Review: Path2Response reviews privacy policy, sends tag pixel credentials, assists with pixel setup

- Data Onboarding: Client provides customer housefile and sends weekly customer/transaction/suppression files

- Audience Build: Path2Response builds, fulfills, and ships Path2Ignite and Boost names to printer

- Production: Printer prints and mails postcards within 48 hours

- Analytics: Path2Response runs Response Analysis and provides regular updates

Path2Response Responsibilities

- Tag and site visitor identification

- Model, score, rank

- List ops: test & holdout panels, fulfillment

- Audience strategy, optimization, best practices

- Analytics and results reporting

Agency Partner Responsibilities

- Creative for PDDM piece

- PDDM-capable printer selection

- Strategy/support for client

- Sell Path2Ignite to clients/prospects

Co-op Participation Impact

- Co-op participants: Signature scoring algorithm + custom acquisition model

- Non-participants: Signature scoring algorithm only

Market Context

- PDDM market spend: $317MM (2022), growing at 27.4% CAGR

- Projected: $523MM by 2025

- Represents ~1.3% of total direct mail spend and rising

Digital Audiences

What It Is: 300+ unique audience segments powered by first-party transaction data for digital marketing campaigns.

Audience Categories:

| Category | Details |

|---|---|

| Behavioral | In-market audiences with 30, 60, 90-day activity windows across 40+ segments; 35+ buyer categories by product type |

| Demographic | Verified buyer demographics and lifestyle personas |

| Intent-Based | Buying propensities by category; seasonal and holiday buyer segments |

| Super Buyers | Segmented by recency, frequency, and spending |

| Donor Segments | 19 donor categories for nonprofit use |

| Custom | Custom audience creation available |

Data Foundation:

- Billions of individual purchase transaction records

- Daily updates tracking digital behavior signals

- 5+ billion unique site visits

- Nationwide spending data across 40+ product categories

Path2Contact (Reverse Email Append)

What It Is: Transforms anonymous email addresses into actionable customer data with postal addresses and responsiveness rankings.

Process:

- Upload: Submit email addresses with missing contact details

- Enrichment: Match against consumer database to add names and postal addresses

- Ranking: Proprietary response rankings based on actual purchase behavior

Key Capabilities:

- Converts “mystery emails” into complete records

- Behavioral scoring based on real-world purchase patterns

- Enables multichannel engagement (email + direct mail)

Use Cases:

- Nonprofits: Connect donors across multiple channels

- Retailers: Engage online shoppers with offline campaigns

- Agencies: Enhance client campaign effectiveness

Path2Optimize (Data Optimization)

What It Is: Data enrichment service that scores and optimizes client-provided files with minimal transactional data using P2A3XGB modeling. Available for Contributing and non-Participating brands.

Accepts:

- External compiled files and third-party lists

- Lead generation data (contests, sweeps, info requests)

- Consumer contact information from retail locations

- Lapsed customer files

- Cross-sell files from other titles within parent company

- Housefile portions needing optimization

How It Works:

- P2R receives client-provided file

- Records matched to P2R database (“Gross → Net” — match rate varies by file quality)

- Net records become scoring universe

- P2A3XGB model scores using client responders (if available) or proxy responders from similar category

- Output: Optimized name/address, original client ID (June 2025+), segment/tier indicator

Delivers:

- Complete “best name” and postal data (optimal individual for direct response in household)

- P2A3XGB model scoring ranking consumers by response likelihood

- Tiered rankings for targeting optimization

- Client-provided ID returned with fulfillment (CustID, DonorID)

Proven Results:

- 100% performance improvement for outdoor apparel retailer

- 60% response rate lift over other providers for home furnishings

- 127% average gift increase for education nonprofit

Path2Expand (P2EX)

What It Is: Product providing incremental prospect names beyond the primary Path2Acquisition universe. Extends reach when clients need more names than their P2A segment delivers.

Key Constraint: Cannot run standalone — Path2Acquisition must always run first in the order.

How It Works:

- Run Path2Acquisition model normally (MPT, 5% counts, front-end omits)

- Dummy fulfill/ship P2A names to exclude from P2EX universe

- Clone P2A model for P2EX (same parameters, only add ImportID to Prospect/Score Excludes)

- P2EX delivers additional names beyond P2A segment

- When fulfilling both, P2A is Fulfillment Priority #1

Use Case: Client orders 250K from P2A model, but segment size is only 100K. Path2Expand provides additional incremental names beyond the P2A universe.

Comparison with Balance Model:

- Balance Model: Post-merge solution (after client’s merge/purge)

- Path2Expand: Pre-merge extension (before client’s merge/purge, same order as P2A)

Path2Linkage (Data Partnerships)

What It Is: “The ultimate source of truth for transaction-based US consumer IDs” - enabling data partnership arrangements.

Coverage & Scale:

- Nearly every U.S. household

- Hundreds of millions of individual consumer records

- Updated 5 times weekly

Data Characteristics:

- Built on first-party (Name & Address) data linked to transaction history

- Excludes unverified third-party data sources

- Compliant with federal and state privacy legislation

Companion Product - Path2InMarket:

- Individual-level transaction data with recency indicators

- Target “in-market active spenders” in 10+ categories

- Transaction recency flags and spend indices

Reactivation Services

What It Is: Reactivates lapsed buyers and donors through targeted outreach.

Capabilities:

- Identifies previously engaged customers for re-engagement

- Targets multichannel donor behavior

- Leverages actual giving data updated weekly

Data Foundation

All products are built on Path2Response’s cooperative database:

| Metric | Value |

|---|---|

| Transaction Records | Billions (individual-level) |

| Site Visits | 5B+ unique daily |

| Update Frequency | Weekly (transactions), Daily (digital signals) |

| Product Categories | 40+ |

| Geographic Coverage | Nationwide (US) |

| Household Coverage | Nearly every US household |

Delivery & Integration

- Turnaround: Custom audiences delivered within hours

- Channels: Direct mail, digital display, mobile, Connected TV

- Formats: Multiple delivery formats for campaign deployment

Path2Response Technology & Data

Data Cooperative Model

What is a Data Cooperative?

Path2Response operates as a marketing data cooperative where member organizations (retailers, nonprofits, catalogs) contribute customer data in exchange for access to other consumers’ information. This creates a shared pool of transaction data that benefits all members.

How It Works

- Members contribute their customer transaction data to the cooperative

- Path2Response aggregates data across all members

- Machine learning models identify patterns and build audiences

- Members access audiences of consumers who have demonstrated relevant behaviors

Data Assets

Transaction Data

| Metric | Value |

|---|---|

| Volume | Billions of individual-level purchase transaction records |

| Update Frequency | Weekly |

| Coverage | Nationwide (US) |

| Household Reach | Nearly every US household |

| Categories | 40+ product categories |

Digital Behavior Signals

| Metric | Value |

|---|---|

| Site Visits | 5B+ unique visits daily |

| Update Frequency | Daily |

| Signal Types | Cart abandonment, pages visited, purchase history, browse behavior |

Consumer Records

| Metric | Value |

|---|---|

| Individual Records | Hundreds of millions |

| Data Updates | 5 times weekly (Path2Linkage) |

| Identity Foundation | First-party (Name & Address) linked to transaction history |

Data Sources

Primary Sources

- Cooperative Database: Member-contributed customer transaction data

- Website Tracking: Cookies and similar technologies capturing browsing behavior

- Digital Activity: Online behavior signals and site visitation data

Secondary Sources

- Public records

- Census data

- Telephone directories

- Licensed third-party data

- Online activity providers

Technology Platform

Machine Learning Approach

Path2Response emphasizes automated, ML-driven data processing:

- Single Configurable Model: One model architecture with thousands of iterations

- Scale: Processes billions of data points per model run

- Speed: “Thousands of iterations in a single day”

- Automation: Reduces manual methods and human bias

- Optimization: Identifies optimal performance and maximum scale

Key Technical Capabilities

| Capability | Description |

|---|---|

| Audience Delivery | Custom audiences delivered within hours |

| Site Visitor ID | 24-48 hour turnaround from identification to fulfillment |

| Recency Tracking | Identifies active shoppers within 24-48 hours of last purchase |

| Segment Library | 300+ unique audience segments |

| Activity Windows | 30, 60, 90-day behavioral windows |

Data Processing Pipeline

- Ingest: Daily digital signals, weekly transaction data

- Match: Link records across sources using first-party identifiers

- Model: Run ML iterations to build optimized audiences

- Score: Rank consumers by response likelihood / direct response-readiness

- Deliver: Provide audiences for campaign deployment

Data Quality & Differentiation

First-Party Focus

Path2Response emphasizes using verified, transaction-based data:

“Actual, verified buying behavior—not ‘modeled’ intent or stagnant surveys”

“Proven shoppers, not ‘ghost’ leads”

Data Freshness

- Transaction records updated weekly

- Digital behavior signals updated daily

- Site visitor identification within 24-48 hours

Exclusions

Path2Linkage explicitly excludes unverified third-party data sources to maintain data quality.

Privacy & Compliance

Regulatory Status

- Registered as a data broker under Texas law with the Texas Secretary of State

- SOC-2 certified (mentioned in nonprofit marketing materials)

Consumer Privacy Rights

| Right | Mechanism |

|---|---|

| Opt-Out of Data Services | Privacy portal or phone: (888) 914-9661, PIN 625 484 |

| Opt-Out of Marketing Emails | Unsubscribe links |

| Cookie Management | Browser settings |

| California Rights | Opt-out of sales/sharing |

| Virginia/Connecticut Rights | Access, delete, correct |

| Nevada Rights | Opt-out of “sales” |

Data Retention

“We will retain personal information for as long as necessary to fulfill the purpose of collection” including legal requirements and fraud prevention.

Compliance Framework

Path2Linkage is described as “compliant with federal and state privacy legislation, including disclosure, consent, and opt-in requirements.”

Integration Channels

Audiences can be deployed across:

- Direct mail

- Digital display advertising

- Mobile advertising

- Connected TV (CTV)

- Multi-channel campaigns

Path2Response Team & Leadership

Source: 2025-Q4 EOQ Organization Chart (page 51) Last Updated: January 2026

Executive Team

| Name | Title | Direct Reports |

|---|---|---|

| Brian Rainey | Chief Executive Officer | Phil Hoey, Chris McDonald, Michael Pelikan, Karin Eisenmenger, Curt Blattner |

| Phil Hoey | Chief Financial Officer | Finance, HR, Legal |

| Chris McDonald | CRO / President | Sales, Client Partners, Marketing |

| Michael Pelikan | Chief Technology Officer | Engineering, Data Science |

| Karin Eisenmenger | Chief Operating Officer | Operations, Data Acquisition, Client Support, Product Management |

| Curt Blattner | VP Digital Strategy | Digital Agency |

Organization by Department

Finance & Administration (Phil Hoey, CFO)

| Name | Title |

|---|---|

| Bill Wallace | Controller |

| Mary Russell Bauson | VP Human Resources |

| Tom Besore | General Counsel |

| Marissa Monnett | AP and Billing Manager |

| Sierra Bryan | Admin. Assistant |

Sales & Client Development (Chris McDonald, CRO/President)

Client Partners

| Name | Title |

|---|---|

| Bill Gerstner | VP Client Partner |

| Kathy Huettl | SVP, Client Partner |

| Michael Roots | Sr Client Partner |

| Robin Lebo | Client Partner |

| Lauryn O’Connor | DV Client Partner |

| Lindsay Asselmeyer | Client Partner |

Client Development

| Name | Title |

|---|---|

| Bruce Hemmer | VP, Regional Client Development |

| Niki Davis | VP - Data Partnerships & Client Development |

| Paula Jeske | VP - Client Development |

| Jacky Jones | CTO (Client Development) |

| Open Position | Director, Client Development |

Marketing

| Name | Title |

|---|---|

| Amye King | Marketing Director |

Technology (Michael Pelikan, CTO)

Engineering (John Malone, Director of Engineering)

| Name | Title |

|---|---|

| John Malone | Director of Engineering |

| Jason Smith | Senior Data Engineer |

| Chip Houk | Senior Software Engineer |

| David Fuller | Senior Software Engineer |

| Wes Hofmann | Infrastructure Engineer |

| Alexa Green | Independent Contractor |

Data Science (Igor Oliynyk, Director of Data Science)

| Name | Title |

|---|---|

| Igor Oliynyk | Director of Data Science |

| Morgan Ford | Data Scientist |

| Erica Yang | Data Scientist |

| Paul Martin | Data Scientist |

Operations (Karin Eisenmenger, COO)

Data Acquisition

| Name | Title |

|---|---|

| Rick Ramirez | Senior Manager, Data Acquisition |

| Brian Tamura | Sr. Data Acquisition |

| Megan Fuller | Data Acquisition |

| Stephanie Evans | Data Acquisition |

| Olivia Cepras-McLean | Data Acquisition Specialist |

Client Support

| Name | Title |

|---|---|

| Jeanne Obermeier | Manager, Client Support Services |

| Char Barnett | Sr. Client Support Specialist |

| Vanessa Peyton | Client Support |

| Jami Bayne | Client Support Specialist |

Performance & Results

| Name | Title |

|---|---|

| Chad Strong | Performance & Results Analyst |

Product Management

| Name | Title |

|---|---|

| Vacant | VP, Product Management |

Note: Andrea Gioia held this role briefly (~50 days) after Wells Spence departed. Position currently unfilled (as of January 2026).

New Initiatives

| Name | Title |

|---|---|

| Greg Mitchell | Mgr, Improvement & New Initiatives Director |

| Danny DeVinney | New Initiative Production Specialist |

| Reisam Amjad | New Initiative Production Specialist |

Digital Strategy (Curt Blattner, VP Digital Strategy)

| Name | Title |

|---|---|

| Miranda Graham | Digital Agency Specialist |

Key Contacts by Function

| Function | Primary Contact | Title |

|---|---|---|

| Engineering | John Malone | Director of Engineering |

| Data Science | Igor Oliynyk | Director of Data Science |

| Client Partners | Bill Gerstner | VP Client Partner |

| Client Development | Paula Jeske | VP - Client Development |

| Data Partnerships | Niki Davis | VP - Data Partnerships & Client Development |

| Operations | Karin Eisenmenger | COO |

| Product Management | Vacant | VP, Product Management |

| Client Support | Jeanne Obermeier | Manager, Client Support Services |

| Data Acquisition | Rick Ramirez | Senior Manager, Data Acquisition |

| HR | Mary Russell Bauson | VP Human Resources |

| Legal | Tom Besore | General Counsel |

| Finance | Bill Wallace | Controller |

| Marketing | Amye King | Marketing Director |

| Digital Strategy | Curt Blattner | VP Digital Strategy |

Team Size Summary

| Department | Headcount |

|---|---|

| Executive | 6 |

| Finance & Administration | 5 |

| Sales & Client Development | 12 (1 vacant) |

| Engineering | 6 |

| Data Science | 4 |

| Operations (total) | 12 (1 vacant: VP PM) |

| Digital Strategy | 2 |

| Total | ~48 |

Location

Path2Response is a fully remote company. Employees are distributed across ~15 US states.

Corporate Address (legal/mail only): 390 Interlocken Crescent, Suite 350 Broomfield, CO 80021

Related Documentation

- CLAUDE.md - Development team details

- Data Science Overview - DS team and processes

- PROD-PATH Process - How PROD and PATH teams interact

Path2Response Markets & Clients

Target Market Segments

Primary Customer Types

| Segment | Description |

|---|---|

| Marketers & Growth Strategists | Brand marketers focused on expanding reach and impact |

| Agency Growth Strategists | Agencies delivering enhanced client results |

| Fundraising Leaders | Nonprofit directors focused on donor acquisition |

Industries Served

Retail & E-Commerce